Simple, Locally Hosted LLM Chatbots - With Memory!

I used Ollama, Python, and simple txt files to create private, locally hosted chatbots with memory. (GitHub)

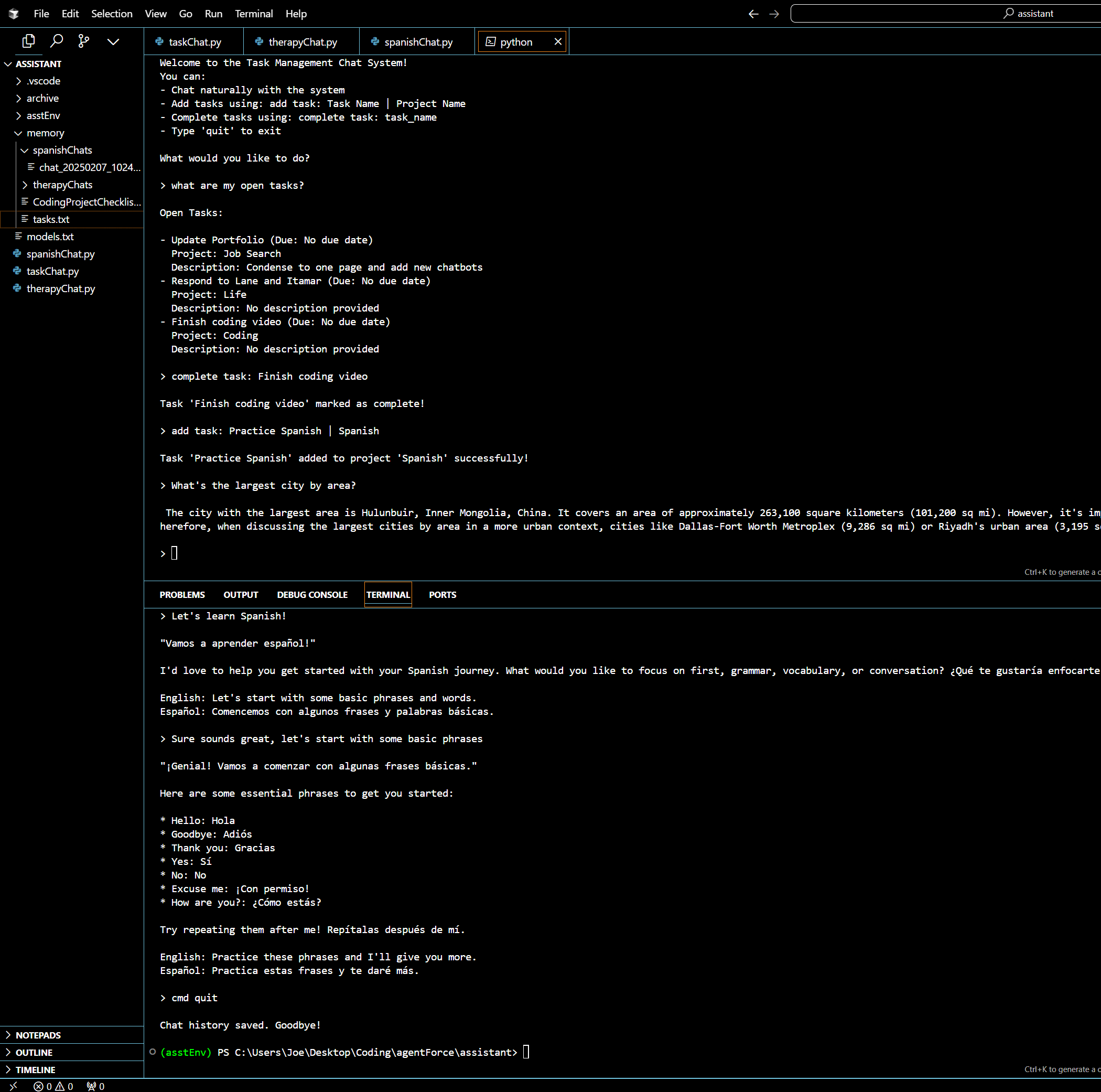

In the video below you'll see two basic kinds of chatbot:

- A personal assistant that can help you with your tasks and schedule, and answer general questions.

- Specialty bots that save entire chat histories and retain context across Spanish lessons, therapy sessions, meal prep and more.

The personal assistant has more complex CRUD commands that are triggered by specific phrases. These tell the agent to update the task.txt file by creating, completing, or deleting tasks. The other chatbots bulk-save the whole conversation to 'Memory' when you end a session ('cmd quit').
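Here is a minimal sketch of how phrase-triggered task commands could work. The trigger phrases ("add task:", "complete task:", "delete task:"), the `[ ]` / `[x]` line format, and the function names are all assumptions made for illustration; the actual script may use different wording and a different file layout.

```python
from pathlib import Path

# Hypothetical trigger phrases; the original script's wording may differ.
PREFIXES = {
    "add task:": "add",
    "complete task:": "complete",
    "delete task:": "delete",
}


def parse_command(user_input):
    """Return (action, task_text) if the input starts with a trigger phrase, else None."""
    text = user_input.strip()
    lowered = text.lower()
    for prefix, action in PREFIXES.items():
        if lowered.startswith(prefix):
            return action, text[len(prefix):].strip()
    return None


def apply_command(action, task, path="task.txt"):
    """Update the task file in place and return the resulting list of lines."""
    path = Path(path)
    lines = path.read_text(encoding="utf-8").splitlines() if path.exists() else []
    if action == "add":
        lines.append(f"[ ] {task}")
    elif action == "complete":
        # Flip the checkbox on the matching open task, leave everything else alone.
        lines = [f"[x] {task}" if line == f"[ ] {task}" else line for line in lines]
    elif action == "delete":
        # Drop the task whether it is open or completed (the marker is 4 characters).
        lines = [line for line in lines if line[4:] != task]
    path.write_text("\n".join(lines) + ("\n" if lines else ""), encoding="utf-8")
    return lines
```

In a chat loop you would call `parse_command` on each message first and only pass the message on to the model when it returns `None`, so task updates never depend on the model interpreting them correctly.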

This style of script can work with any model downloaded from Ollama as long as your computer can run it. In the video below I am running these on an HP laptop with no GPU.
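Swapping models can be as simple as changing one string. The sketch below talks to Ollama's local REST endpoint (`/api/chat` on port 11434, the default) using only the standard library. The function names and the way history is passed in are assumptions for illustration, not necessarily how the original script is organized.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/chat"  # Ollama's default local endpoint


def build_payload(model, system_prompt, history, user_input):
    """Assemble the JSON body for one non-streaming chat turn."""
    messages = [{"role": "system", "content": system_prompt}]
    messages += history  # earlier {"role": ..., "content": ...} turns
    messages.append({"role": "user", "content": user_input})
    return {"model": model, "messages": messages, "stream": False}


def chat(model, system_prompt, history, user_input, url=OLLAMA_URL):
    """Send one turn to a running Ollama server and return the reply text."""
    body = json.dumps(build_payload(model, system_prompt, history, user_input)).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["message"]["content"]
```

With the Ollama server running and a model already pulled, a call would look like `chat("llama3.2", "You are a patient Spanish tutor.", [], "Hola!")`, where the model name is just an example. Smaller models will respond faster on a CPU-only machine.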

If you need additional speed, you can adjust the script to send your requests to a remote API such as OpenAI or Anthropic instead. You can also limit how many past conversations are kept in memory.
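One way to cap memory is to save each finished session as its own txt file and load only the most recent few at startup. This is a sketch under assumptions: the `memory` folder, the timestamped file names, and the `max_sessions` parameter are hypothetical, and the original script may store memory differently.

```python
from datetime import datetime
from pathlib import Path


def save_session(transcript, memory_dir="memory", now=None):
    """Write one finished session to its own timestamped .txt file."""
    folder = Path(memory_dir)
    folder.mkdir(parents=True, exist_ok=True)
    stamp = (now or datetime.now()).strftime("%Y%m%d-%H%M%S-%f")
    path = folder / f"session-{stamp}.txt"
    path.write_text(transcript, encoding="utf-8")
    return path


def load_recent_sessions(memory_dir="memory", max_sessions=5):
    """Return the text of the newest max_sessions sessions, oldest first."""
    folder = Path(memory_dir)
    if not folder.exists() or max_sessions <= 0:
        return []
    # Timestamped names sort chronologically, so the last entries are the newest.
    files = sorted(folder.glob("session-*.txt"))
    return [f.read_text(encoding="utf-8") for f in files[-max_sessions:]]
```

The loaded sessions can then be joined into the system prompt. Keeping `max_sessions` small matters on a laptop, since every extra past conversation adds tokens the model has to process on each turn.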